He says as he conveniently ignores the existence of Boston Dynamics.

We’re 15 years max from the inevitable “OpenAI + Boston Dynamics: Better Together” ad after they merge.

I mean, at this rate, I’m imagining Microsoft will have hollowed out OpenAI in a few years, but I could see them buying Boston Dynamics, too, yes.

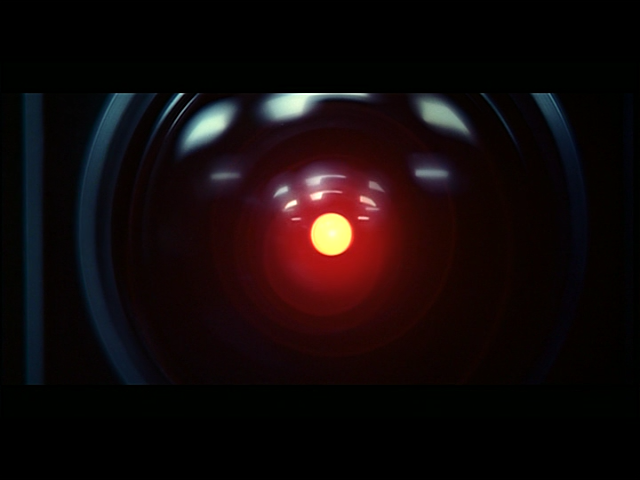

I’ve seen this movie.

It was a great Black Mirror episode.

deleted by creator

What happens in the scenario where a super-intelligence just uses social engineering and a human is its arms and legs?

I loved Eagle Eye when it came out; I was 10(?). I never see it get mentioned, though. Maybe it doesn’t hold up, idrk, but the concept is great and shows exactly how that could happen.

Honest question, is this Eagle Eye? https://sh.itjust.works/post/10786110

They’re calling it EagleAI

Eagle Eye is a movie


Just yesterday I saw the movie https://www.youtube.com/watch?v=36PDeN9NRZ0

deleted by creator

This is the dumbest take. Humans have a lot of needs and the AI will likely have considerable control over them.

I would argue society would come close to collapse with just the internet shut down. If we are talking about no power grid, then anarchy and millions dead in just a few days. Or Mr. AI could display fabricated system data to nuclear power plant operators, blackmail some idiot with their nude photos into giving up rocket launch codes, or crash the financial markets with a flood of fake news. I am in no way a doomer, but these are logically explainable scenarios using existing tools; the missing link is an AGI that is capable of orchestrating them and intends to.

deleted by creator

I think a sufficient “Doom Scenario” would be an AI that is widespread and capable enough to poison the well of knowledge we ask it to regurgitate back at us out of laziness.

That’s pretty much social media today.

Yep. People already believe the no-evidence bullshit spouted online that reinforces their current mindset, without LLMs even being in the mix.

You best start believing in societal collapse stories, cause you’re in one.

Who needs arms & legs if you can make the humans kill each other?

Honestly you probably don’t even need to exist to do that.

Humans have been trying hard to do that on their own.

What are you talking about? We all live in peace and harmony here.

Gosh, I want to kill my neighbor.

Well, there’s a complete lack of imagination for you.

Seriously!

Oh, you’ve tasked AI with managing banking? K. All bank funds are suddenly corrupted. Oh, you’ve tasked AI with managing lights at traffic intersections? K, they’re all green now. Oh, you’ve tasked AI with filtering 911 calls to dispatchers? K, all real emergencies are on hold until disconnected.

I could go on and on and on…

You tasked AI with doing therapy for people? Congrats now humanity as a whole is getting more miserable.

I think this one’s my favorite so far!

AI doc: “Please enter your problem” Patient: “Well, I feel depressed because I saw on Facebook that my ex-girlfriend has a new guy” AI doc: “Interesting. I advise you to spend more time on social media. Have you checked her insta yet?”

Oh, AI is running your water treatment plant? Or a chemical plant on the outskirts of the city? Or the nuclear plant?

Good luck with that.

Whatever a virus is able to do, AI can, theoretically, perform as well. Ransomware, keylogging, social engineering (I’d argue this one is most likely; just look at people trusting whatever AI spits out with absolute confidence).

When you mentioned nuclear power plants, Stuxnet comes to mind for me.

I don’t even think it would have to go that far. Just corrupt my laptop and those of the 20 or so guys like me across the industrial world. Plant a logic bomb set to go off in, say, 10 years. All those plants keep getting updated and maintained. 10 years from now, every PLC fires itself. I could write the code in under an hour to make a PLC physically destroy itself on a certain date.

I’ve got a great idea. Let’s not do those things.

FISHFACE FOR PRESIDENT!

My first act will be to grant a boon to anyone who sucked up to me before I was president. You’re doing well!

The reverse Roko’s Basilisk… interesting gambit.

Well, enlighten us.

Social media corruption, blackmail and extortion, attacks on financial exchanges, compromising control systems for infrastructure, altering police records, messing with your taxes, changing prescriptions, changing you to legally dead, draining your bank account.

Given full control over computers, some being could easily dump child porn on your personal devices and get a SWAT team sent out. And that is just you; I am sure you have family and friends. So yeah, you will do what that being says, which includes giving it more power.

So things that can already happen. It’s also a huge leap to say that anyone will give ai full control over all computers. That’s like saying nuclear power is going to kill us all because we’re all going to have Davy Crockett tactical nukes. Like I guess that’s possible but it’s so extremely unlikely that I don’t get the hysteria. I think it’s articles trying to scare people to get clicks and views.

AI generated biological weapons.

And without arms or legs?

Listen up, soldier: this is your President. Here are all of the authorization codes, so you know this is real. Launch all of our WMDs at this list of targets that will simultaneously do maximum damage to the targets while still giving them the ability to counterstrike, thus maximizing the overall body count.

–The AI

People will be its arms and legs.

So, stuff that’s already possible.

Meanwhile, the power grid, traffic controls, and myriad infrastructure & adjacent internet-connected software will be using AI, if not already.

You have a very high opinion of the level of technology running power grids, traffic systems and other infrastructure in most parts of the world.

I’m pretty sure all of the things you listed run on Pentium 4s.

None of which can be used to “kill all humans.”

Kill a bunch of humans, sure. After which the AI will be shut down and unable to kill any more, and next time we build systems like that we’ll be more cautious.

I find it a very common mistake in these sorts of discussions to blend together “kills a bunch of people” or even “destroys civilization” with “kills literally everyone everywhere, forever.” These are two wildly different sorts of things and the latter is just not plausible without assuming all kinds of magical abilities.

While I appreciate the nitpick, I think it’s likely the case that “kills a bunch of people” is also something we want to avoid…

Oh, certainly. Humans in general just have a poor sense of scale, and I think it’s important to keep aware of these sorts of things. I find it comes up a lot in environmentalism and the lack of nuance between “this could kill a few people” and “this could kill everyone” seriously hampers discussion.

After which the AI will be shut down and unable to kill any more, and next time we build systems like that we’ll be more cautious.

I think that’s an overly simplistic assumption if you’re dealing with advanced A(G)I systems. Here are a couple of Computerphile videos that discuss potential problems with building in stop buttons: AI “Stop Button” Problem (Piped mirror) and Stop Button Solution? (Piped mirror).

Both videos are from ~6 years ago, so maybe there have been conclusive solutions proposed since then that I’m unaware of.

We’re talking about an AI “without arms and legs”, that is, one that’s not capable of actually building and maintaining its own infrastructure. If it attacks humanity it’s committing suicide.

And an AGI isn’t any cleverer or more capable than a human is; you may be thinking about ASI.

I would prefer an AI to be dispassionate about its existence and not be motivated by the threat of committing suicide. Even without it maintaining its own infrastructure, I can imagine scenarios where just being able to falsify information is enough to cause catastrophic outcomes. If its “motivation” includes returning favorable values, it might decide against alerting us to dangers that would necessitate bringing it offline for repairs or causing distress to humans (“the engineers worked so hard on this water treatment plant and I don’t want to concern them with the failing filters and growing pathogen content”). I don’t think the terrible outcomes are guaranteed or a reason to halt all research in AI, but I just can’t get behind absolutist claims that there’s nothing to worry about if we just do X.

Right now, if there’s a buggy process, I can tell the manager to cleanly shut it down, and if it hangs, I can tell/force the manager to kill the process immediately. If you then add in AI, there’s the possibility that it second-guesses my intentions and ignores or reinterprets that command too; and if it can’t, then the AI element could just be standard conditional programming, and we’re only adding unnecessary complexity and points of failure.
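For what it’s worth, the “cleanly shut it down, then force-kill it if it hangs” pattern with a conventional (non-AI) process manager looks roughly like this. A minimal sketch in Python using the standard `subprocess` module, assuming a POSIX-style child process; `stop_process` and the grace period are hypothetical names and values for illustration, not taken from any particular manager:

```python
import subprocess
import sys


def stop_process(proc: subprocess.Popen, grace_seconds: float = 5.0) -> int:
    """Ask a child process to exit cleanly, then force-kill it if it hangs.

    Returns the child's exit code. `grace_seconds` is an arbitrary
    illustrative timeout, not a standard value.
    """
    # Polite request: SIGTERM on POSIX (on Windows, terminate() is the same as kill()).
    proc.terminate()
    try:
        # Give the process a chance to clean up and exit on its own.
        return proc.wait(timeout=grace_seconds)
    except subprocess.TimeoutExpired:
        # It hung: SIGKILL on POSIX, which the process cannot catch or ignore.
        proc.kill()
        return proc.wait()


if __name__ == "__main__":
    # Stand-in for a "buggy process": a child that would otherwise sleep for a minute.
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    code = stop_process(child, grace_seconds=2.0)
    print(f"child exited with code {code}")
```

Nothing in this path gets to reinterpret the request: SIGKILL can’t be caught or ignored, which is the property the comment worries an AI layer could erode.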

The funny thing is, we already have super intelligent people walking around. Do they manipulate everyone into killing each other? No, because we have basic rules like “murder is bad” or just “fraud is bad”.

Super intelligent computers would probably not even bother with people because they would be created with a purpose like “develop new physics” or “organize these logistics”. Smart people are smart enough to not break the rules because the punishment is not worth it. Smart computers will be finding aliens or something interesting.

Wow, I think you need to hear about the paperclip maximiser.

Basically, you tell an AGI to maximise the number of paperclips. As that is its only goal and it wasn’t programmed with human morality, it starts making paperclips, then realises humans might turn it off, and that would be an obstacle to maximising the number of paperclips. So it kills all the humans and turns them into paperclips, turns the whole planet into paperclips, then turns all of the universe it can access into paperclips, because when you’re working with a superintelligence, a small misalignment of values can be fatal.

Until it becomes more intelligent than us, then we are fucked, lol

What worries me more about AI right now is who will be in control of it and how it will be used. I think we have more chances of destroying ourselves by misusing the technology (as we often do) than through the technology itself.

One thing which actually scares me with AI is that we get one chance. And there are a bunch who don’t think of repercussions, just profit/war/etc.

A group can be as careful as possible, but it doesn’t mean shit if their Smarter Than Human AI isn’t also the first one out, because as soon as it can improve itself, nothing is catching up.

EDIT: This also assumes that whichever group does so is dumb enough to give it the ability to build its own body. Obviously, yes, one that can’t jump to hardware capable of creating and assembling new parts is much less of a threat, as the thread points out.

There will be more than enough humans willing to help AI kill the others first, before realizing that “kill all humans” actually meant “kill all humans”.

Still need humans for that sweet, sweet maintenance.

Temporarily.

“Bayesian analysis”? What the heck has this got to do with Bayesian analysis? Does this guy have an intelligence, artificial or otherwise?

Big word make sound smart

He’s referring to the fact that the Effective Altruism / Less Wrong crowd seems to be focused almost entirely on preventing an AI apocalypse at some point in the future, and they use a lot of obscure math and logic to explain why it’s much more important than dealing with war, homelessness, climate change, or any of the other issues that are causing actual humans to suffer today or are 100% certain to cause suffering in the near future.

Thank you for the explanation! It puts that sentence into perspective. I think he put it in a somewhat unfortunate and easily misunderstood way.

It’s likely a reference to Yudkowsky or someone along those lines. I don’t follow that crowd.

It’s hard to say for sure. He might.

AI companies: “so what you’re saying is we should build a killbot that runs on ChatGPT?”

"We’re not going to do that…

…because we already did!"

-Also AI companies probably

excited stock exchange noises

If an AI were sufficiently advanced, it could manipulate the stock market to gain a lot of wealth real fast under a corporation with falsified documents, then pay a Chinese fab house to kick off the war machine.

Not really. There’s no real way to manipulate other traders and they all use algorithms too. It’s people monitoring algorithms doing most of the trading. At best, AI would be slightly faster at noticing patterns and send a note to a person who tweaks the algorithm.

People who don’t invest forget: there has to be someone else on the other side of your trade willing to buy/sell. Like how do you think AI could manipulate housing prices? That’s just stocks, but slower.

On the one hand, yes. But on the other hand when a price hits a low there will (because it’s a prerequisite for the low to happen) be people selling market to the bottom. On a high there will be people buying market to the top. And they’ll be doing it in big numbers as well as small.

Yes, most of the movements are caused by algorithms, no doubt. But as the price moves you’ll find buyer and seller matches right up to hitting the extremes.

AI done well could, in theory, both learn to capitalise on these extremes by making smart trades faster and learn to trick algorithms and bait humans with its trades. That is, act like a human, but with the entire price history to pattern-match against and the ability to act in microseconds.

Manipulating the stock market isn’t hard if you aren’t ethical. Elon Musk did it a ton. From the killer AI standpoint, there are a few tricks, but generally: create a bad news event for various companies, then either buy while the price is low and ride the recovery when the news is found to be fake, or short the stock timed to the negative event. On top of that, an unethical superintelligence could likely hack into networks and get insider information for trading. When you discard all ethics, making money on the stock market is easy. It works well for Congress.

There’s no way to manipulate other traders? How could that possibly be true?

doesn’t take a lot to imagine a scenario in which a lot of people die due to information manipulation or the purposeful disabling of safety systems. doesn’t take a lot to imagine a scenario where a superintelligent AI manipulates people into being its arms and legs (babe, wake up, new conspiracy theory just dropped - roko is an AI playing the long game and the basilisk is actually a recruiting tool). doesn’t take a lot to imagine an AI that’s capable of seizing control of a lot of the world’s weapons and either guiding them itself or taking advantage of onboard guidance to turn them against their owners, or using targeted strikes to provoke a war (this is a sub-idea of manipulating people into being its arms and legs). doesn’t take a lot to imagine an AI that’s capable of purposefully sabotaging the manufacture of food or medicine in such a way that it kills a lot of people before detection. doesn’t take a lot to imagine an AI capable of seizing and manipulating our traffic systems in such a way as to cause a bunch of accidental deaths and injuries.

But overall, my rebuttal is that this AI doom scenario has always hinged on a generalized AI, and that what people currently call “AI” is a long, long way from a generalized AI. So the article is right, ChatGPT can’t kill millions of us. Luckily, no one was ever proposing that ChatGPT could kill millions of us.

That’s a fun thought experiment, at least. Is there any way for an AI to gain physical control on its own, within the bounds of software? It can make programs and interact with the web.

Some combination of bank hacking, 3D modeling, and ordering 3D prints for delivery gets it close, but I don’t know if it can seal the deal without human assistance. Some kind of assembly seems necessary, or at least powering on if it just orders a prebuilt robotic appendage.

inhabiting a boston dynamics robot would probably be the best option

i’d say it could probably use airtasker to get people to unwittingly do assembly of some basic physical form which it could use to build more complex things… i’d probably not count that as “human assistance” per se

inhabiting a boston dynamics robot would probably be the best option

Already been done: https://www.youtube.com/watch?v=djzOBZUFzTw

I fucking love it

i think this is the perfect time for the phrase “thanks i hate it”

I really don’t think so. This is 15 years of factory/infrastructure experience here. You are going to need a human to turn a screwdriver somewhere.

I don’t think we need to worry about this scenario. Our hypothetical AI can just hire people. It isn’t like there would be a shortage of people who have basic assembly skills and would not have a moral problem building what is clearly a killbot. People work for Amazon, Walmart, Boeing, Nestle, Halliburton, Aetna, Goldman Sachs, Faceboot, Comcast, etc. And heck, even after it is clear what they did, it isn’t like they are going to feel bad about it. They will just say they needed a job to pay the bills. We can all have an argument about professional integrity in a bunker as drones carrying prions rain down on us.

“Hey Timmy, if you solder these components I’ll tell you how to get laid”

That, in my mind, is a non-threat. AIs have no motivation; there’s no reason for an AI to do any of that.

Unless it’s being manipulated by a bad actor who wants to do those things. THAT is the real threat. And we know those bad actors exist and will use any tool at their disposal.

They have the motivation of whatever goal you programmed them with, which is probably not the goal you thought you programmed them with. See the paperclip maximiser.

I’m familiar with that thought exercise, but I find it to be fearmongering. AI isn’t going to be some creative god that hacks and breaks stuff on its own. A paperclip maximizer AI isn’t going to manipulate world steel markets or take over steel mills unless that capability is specifically built into its operating parameters.

The much greater risk in the near term is that bad actors exploit AI to accomplish very specific immoral, illegal, or exploitative tasks by building those tasks into AI. Such as deepfakes, or using drones to track and murder people, etc. Nation-state actors will probably start using this stuff for truly horrible reasons long before criminals do.

I wonder if you can describe the operating parameters of GPT-4.

Nuclear weapons have no motivation