Welcome to today’s daily kōrero!

Anyone can make the thread, first in first served. If you are here on a day and there’s no daily thread, feel free to create it!

Anyway, it’s just a chance to talk about your day, what you have planned, what you have done, etc.

So, how’s it going?

So I’m still not feeling great, but getting a bit more energy now. Spent the weekend doing not much. Must have had whatever @eagleeyedtiger@lemmy.nz and @flambonkscious@sh.itjust.works had 🙁

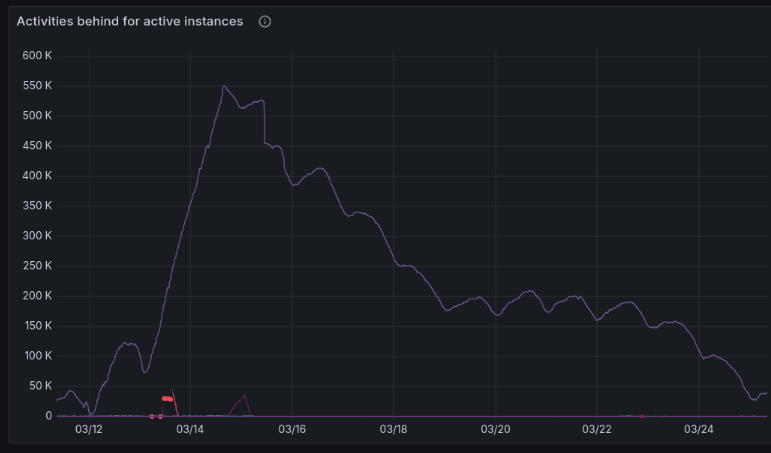

On the plus side, we are looking a lot healthier on the federation front with Lemmy.world. It seems we can’t make much progress with weekday traffic but when the weekend comes there’s a lot less content and so we can catch up some more. We are currently down to about 2 hours delay for content coming from Lemmy.world to Lemmy.nz. Here’s a graph:

And here’s one showing the number of “activities” (actions) behind we are:

Sorry that one graph is weirdly thin and tall; they cover the same timeline but seem to display differently. I didn’t make the graphs, but they are publicly accessible here and here.
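The weekday/weekend pattern is basically a queue. The backlog only shrinks when the sending instance produces activities more slowly than we can take them in. Here's a rough sketch of that arithmetic. The hourly rates are made-up assumptions for illustration, not measurements from Lemmy.world or Lemmy.nz. It also assumes activities are handled roughly first-in-first-out at a steady rate.

```python
def simulate_backlog(start_backlog: int, incoming_per_hour: list[int],
                     processed_per_hour: int) -> list[int]:
    """Return the backlog (activities behind) at the end of each hour.

    Each hour the backlog grows by the incoming activities and shrinks by
    however many we manage to process, never going below zero.
    """
    backlog = start_backlog
    history = []
    for incoming in incoming_per_hour:
        backlog = max(0, backlog + incoming - processed_per_hour)
        history.append(backlog)
    return history


def delay_hours(backlog: int, processed_per_hour: int) -> float:
    """Rough wait, in hours, for an activity joining the back of the queue."""
    return backlog / processed_per_hour


if __name__ == "__main__":
    # Hypothetical rates: busy weekday hours vs quieter weekend hours.
    weekday = simulate_backlog(20_000, [12_000] * 3, processed_per_hour=10_000)
    weekend = simulate_backlog(20_000, [6_000] * 3, processed_per_hour=10_000)
    print("weekday:", weekday)  # backlog grows: [22000, 24000, 26000]
    print("weekend:", weekend)  # backlog shrinks: [16000, 12000, 8000]
    print("delay now:", delay_hours(weekend[-1], 10_000), "hours")  # 0.8 hours
```

The point is that throughput only has to be slightly below the weekday arrival rate for the lag to keep creeping up. Quieter weekends are then the only time the queue drains.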

These are pretty neat graphs! Are they sourced from the Prometheus logs?

Just updated to 0.19.3 but the DB migrations failed due to a permissions change I made a while back to my DB, so I had to spend a few hours in the SQL dungeons fixing things.

I didn’t make the dashboards, but the data is available through an API call to each instance. I guess they have some polling set up and then feed it into the dashboard?
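If the dashboards do poll each instance, the two numbers in the graphs could be derived roughly like this. This is a sketch under assumptions. The field names (`latest_activity_id`, `last_successful_id`, `last_successful_published_time`) are hypothetical stand-ins for whatever the instance API actually returns. `fetch_state` is a placeholder for the real HTTP call.

```python
import time
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class LagSample:
    taken_at: datetime
    activities_behind: int
    delay_seconds: float


def compute_lag(state: dict, now: datetime) -> LagSample:
    # Hypothetical fields: the sender's newest activity id, the last id it
    # delivered successfully to us, and when that delivered activity was
    # published (a timezone-aware ISO 8601 string such as "...+00:00").
    behind = max(0, state["latest_activity_id"] - state["last_successful_id"])
    published = datetime.fromisoformat(state["last_successful_published_time"])
    delay = max(0.0, (now - published).total_seconds())
    return LagSample(now, behind, delay)


def poll(fetch_state: Callable[[], dict], samples: int,
         interval_seconds: float) -> list[LagSample]:
    """Call fetch_state() repeatedly and turn each response into a LagSample."""
    results = []
    for i in range(samples):
        if i:
            time.sleep(interval_seconds)  # wait between polls, not before the first
        results.append(compute_lag(fetch_state(), datetime.now(timezone.utc)))
    return results
```

Each `LagSample` is then one point on the "activities behind" graph and one on the "delay" graph.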

I’ve been testing some stuff with pictrs, and have been updating pictrs from 0.4 to 0.5 in my non-prod instance. There’s no progress meter and so far it has taken 2 days 😱.

It’s a lot less grunty than the production server but still seems excessive!

Interesting. I have some New Relic stuff set up with my cluster, but most of that is just resource usage stuff. I ran out of RAM a while back so I’ve had to be a bit more restrictive about how many connections Lemmy can have to postgres db.

There’s no progress meter and so far it has taken 2 days 😱.

Uh oh. I considered updating pictrs to 0.5 as part of my 0.18.3-ish -> 0.19.3 upgrade (I was running a custom fork I made with some image caching stuff that has since been merged into real lemmy), but I’m glad I didn’t.

Thanks for the heads up. Are you migrating to postgres for pictrs too, or sticking with sled?

I ran out of RAM a while back so I’ve had to be a bit more restrictive about how many connections Lemmy can have to postgres db.

I just have a cronjob to restart the backend lemmy container every night 😆

Thanks for the heads up. Are you migrating to postgres for pictrs too, or sticking with sled?

My plan is to go to postgres, but this migration is just for sled. I was doing it for another reason: to test out a cache cleaning setup. Currently the pictrs image cache is 250-300gb, because nothing has ever been deleted from it (lemmy doesn’t do that).

Lemmy.world said it took them 4 hours, and they have a grunty machine. Not sure what their cache looked like, though. I think they were also moving to postgres.

cronjob to restart the backend lemmy container

Fair enough, that’d work. I run my database in a different pod to lemmy (I run this all in kubernetes), and I cannot restart that pod without causing an outage for a bunch of other things like my personal website. I ended up just needing to tune my config to have a maximum RAM usage and then configuring k8s to request that much RAM for the DB pod, so it always has the resources it needs.
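For sizing the DB pod's memory request, a common back-of-the-envelope estimate starts with shared buffers and maintenance memory. It then adds, for each allowed connection, some baseline backend overhead plus `work_mem`. A single query can use several multiples of `work_mem`, so treat the result as a rough floor rather than a guarantee. The per-connection overhead and example values below are assumptions, not the settings from the setup described above.

```python
def estimate_postgres_ram_mb(shared_buffers_mb: int, max_connections: int,
                             work_mem_mb: int,
                             per_connection_overhead_mb: int = 10,
                             maintenance_work_mem_mb: int = 64) -> int:
    """Very rough peak-RAM estimate for a Postgres server, in MB.

    Assumes each connection runs at most one work_mem-sized operation at a
    time, which real queries can exceed (each sort or hash gets its own
    work_mem). per_connection_overhead_mb is an assumed backend overhead,
    not a Postgres setting.
    """
    per_connection = per_connection_overhead_mb + work_mem_mb
    return shared_buffers_mb + maintenance_work_mem_mb + max_connections * per_connection


# Example with assumed values: 1 GB shared_buffers, 50 connections, 4 MB work_mem.
print(estimate_postgres_ram_mb(1024, 50, 4))  # 1788
```

Capping `max_connections` is what makes this number bounded. That bound is what lets you set the pod's memory request to match.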

pictrs image cache is 250-300gb

oof :(

That’s what my custom lemmy patch was: it turned off pictrs caching. That’s now in lemmy as a config flag (currently a boolean, but in 0.20 it will be on/off/proxy, where the proxy option goes via your pictrs but does not cache). I then went back through mine and did a bunch of SQL to figure out which pictrs images I could safely delete and got my cache down to 3GB.

I’m not using kubernetes and know nothing about it, but I don’t need to restart postgres, only the ‘lemmy’ container that runs the lemmy backend. By doing this the connections are all severed, the RAM is freed up, and it’s all good again. I should probably learn how to limit connections in another way!

Instead of doing all the working out about pictrs images, I’m just looking at using this: https://github.com/wereii/lemmy-thumbnail-cleaner

An added benefit is that it stays running and keeps your cache trimmed to the timeframe you set. I’m happy with a cache, but after a week it’s not really that helpful. Unfortunately, the pictrs endpoint this script uses, which deletes the image and removes it from the db, is not in pictrs 0.4.x, so I thought I’d quickly run the upgrade in non-prod and test it out. It’s still running; I started it about lunchtime on Saturday! I’m seriously considering pulling the plug and doing it properly into postgres, but it would be nice to know how long it’s gonna take, so I’m also tempted to leave it running. It’s running on an old Vaio laptop set up as a server. I think this machine is older than I first thought, perhaps from 2012, so that might explain a lot!
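Keeping the cache trimmed to a timeframe is essentially age-based expiry. This is a minimal sketch of that idea, not lemmy-thumbnail-cleaner's actual code. `CachedImage` is a hypothetical record type. The `keep` set stands in for images you still want, such as ones referenced by local posts, which is roughly what the hand-written SQL approach was working out. The `delete` callback is a placeholder for the pictrs delete endpoint.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class CachedImage:
    alias: str           # hypothetical: the pictrs alias of the cached image
    cached_at: datetime  # when the image was first cached


def expired(images: Iterable[CachedImage], max_age: timedelta, now: datetime,
            keep: frozenset[str] = frozenset()) -> list[CachedImage]:
    """Return cached images older than max_age, skipping any alias in keep."""
    cutoff = now - max_age
    return [img for img in images if img.cached_at < cutoff and img.alias not in keep]


def trim_cache(images: Iterable[CachedImage], max_age: timedelta,
               delete: Callable[[str], None],
               keep: frozenset[str] = frozenset(),
               now: Optional[datetime] = None) -> int:
    """Call delete() for every expired image and return how many were deleted."""
    now = now or datetime.now(timezone.utc)
    victims = expired(images, max_age, now, keep)
    for img in victims:
        delete(img.alias)  # placeholder for the real pictrs delete call
    return len(victims)
```

Run on a schedule with something like `max_age=timedelta(days=7)`, this keeps roughly a week of cache, matching the "after a week it's not really that helpful" reasoning.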

By doing this the connections are all severed, the RAM is freed up, and it’s all good again.

Ah, neat! I didn’t think of that. You can limit the size of the connection pool in your lemmy config fwiw.
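One thing to watch when shrinking the pool: every Lemmy process keeps its own pool. The total across all of them, plus anything else sharing the database (pictrs on postgres, a personal website, etc.), needs to stay under Postgres's `max_connections`. Postgres also holds back `superuser_reserved_connections` slots, 3 by default. This is a minimal sketch of that arithmetic. The example numbers are assumptions, and the exact name of Lemmy's pool setting should be checked against the config docs for your version.

```python
def max_pool_size(max_connections: int, lemmy_processes: int,
                  other_clients: int = 0, superuser_reserved: int = 3) -> int:
    """Largest per-process pool size that keeps total connections under the limit.

    superuser_reserved mirrors Postgres's superuser_reserved_connections
    (slots kept back for superusers, default 3). other_clients is an assumed
    count of connections used by everything else on the same database server.
    """
    available = max_connections - superuser_reserved - other_clients
    if lemmy_processes <= 0 or available <= 0:
        raise ValueError("no connections left for Lemmy")
    return available // lemmy_processes


# Example with assumed numbers: max_connections=100, two Lemmy processes,
# about 20 connections used by other apps.
print(max_pool_size(100, 2, other_clients=20))  # 38
```

Rounding down keeps a few connections spare instead of landing exactly on the limit.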

Nice, that looks like it’s doing a similar thing to my weird mess of SQL and Python that I did last year haha

Good luck for the migration :)

Here’s some pictures of my pet Leo!

Leo at home

Leo at feeding time

He’s a big boy, pushing 3 inches long! He’s been my pet ever since earlier today, when I found him in my worm farm.

I know these guys eat other slugs, but I cannot express how much I hate slugs of all types

He has lovely speckles. Quite pretty, if slugs can be pretty.

I knew someone who thought they had a pet tiger slug for about 3 weeks, and then the cat ate it.

No cats here, Leo is safe!

I searched up common slugs and found it referred to as a Leopard Slug, though I see now Wikipedia says Tiger Slug is another common name for it. Is Tiger Slug a more common term here?

I also see that my one is only a baby, with adults 10cm-20cm long! Leo is probably about 7cm except when stretched out. I’ll have to keep him well fed and see how big he gets!

Yeah I’ve always known them as tiger slugs. Yes they get pretty big, and dark. He will probably enjoy it if you feed him some kind of wet catfood, they also drink water.

He’s got heaps of soggy food scraps, it seems to be a pretty ideal restaurant for him!

I’m now on my seventh day of covid. I’m about 85% recovered, but I expect this exhaustion to linger for some time. I didn’t realise how burnt out I was from work until I was forced to take this time off, so I decided to take advantage of it by giving myself more time off lol. An extra week of recovery and fun! I’m gonna catch up with some old friends, get a haircut, do my studies, attend Toastmasters and volunteer at the market at the end of the week.

Sounds great, it’s best to really avoid pushing it when you’re recovering. Have a fun week!

Oh man, an extra week off sounds great! Sorry to hear you’ve got COVID. Enjoy your time off!

SO MANY PEACHES. I’m going to run out of space in the freezer at this rate.

Nice! Peaches are one of my favourite stone fruit. Though that might not be the case if I had far too many!

Hiya! Beautiful weekend and now I’m listening to a morepork.

Unfortunately I have something going on with my tooth, dentist tomorrow, not sure what is going to happen or how bad the impact will be on my system and how long recovery is. Hopefully see you all on the other side.

Good luck! Hopefully it won’t be too bad 🙂

Thanks. Good news is they didn’t do it yesterday! Bad news is it’s somewhere in my future, but I’ll cross that bridge when I come to it (again).